
Before we get into the specifics of how ad platforms learn from your campaigns, it's worth understanding the two primary ways AI learns from data: structured and unstructured learning. (Machine learning practitioners usually call these supervised and unsupervised learning.)

Unstructured vs. Structured Learning

Imagine you're training an AI to identify different species of animals. Your dataset includes photos of dogs, cats, birds, and fish.

One approach is to let the AI explore on its own without much instruction. You don't provide labels or tell it what features define each animal. You just give it the photos and let it find patterns. This is unstructured learning.

The AI might start noticing broad characteristics: some animals have four legs, others have wings, some have whiskers. It might also pick up on the environment in each photo. Is the creature on land, in water, or perched in a tree? Based on these observations, the AI starts grouping the animals into clusters. Over time, it might correctly sort them into dogs, cats, birds, and fish.

But what happens when you introduce a photo of ducks in a pond? If all the previous bird photos showed birds in trees, the AI might put the duck in a new category entirely. It doesn't know that ducks are birds. It's just following the patterns it found. This is the challenge with unstructured learning: it's exploratory and can surface unexpected insights, but it's not always accurate.
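To make the duck problem concrete, here is a minimal sketch of label-free grouping. The feature layout (visible legs, wings, whiskers, in water, in tree), the numbers, the `group_photos` helper, and the distance threshold are all hypothetical, chosen only for illustration. Real systems work from far richer signals.

```python
import math

def group_photos(features, threshold):
    """Group feature vectors without labels: join the nearest existing group
    if it is within `threshold`, otherwise open a new group."""
    centers = []       # the first member of each group acts as its center
    assignments = []
    for vec in features:
        dists = [math.dist(vec, c) for c in centers]
        if dists and min(dists) <= threshold:
            assignments.append(dists.index(min(dists)))
        else:
            centers.append(vec)
            assignments.append(len(centers) - 1)
    return assignments

# Hypothetical features: (visible_legs, wings, whiskers, in_water, in_tree)
photos = [
    (4, 0, 0, 0, 0),  # dog
    (4, 0, 1, 0, 0),  # cat
    (2, 1, 0, 0, 1),  # bird in a tree
    (2, 1, 0, 0, 1),  # another bird in a tree
    (0, 0, 0, 1, 0),  # fish
]
duck = (0, 1, 0, 1, 0)  # swimming in a pond, legs hidden

groups = group_photos(photos + [duck], threshold=0.9)
print(groups)
```

Run as written, the two tree birds share a group, while the duck ends up in a group of its own: its environment features look nothing like the birds the system has already seen.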

The other approach is structured learning. Here, you label each photo upfront with the correct species name. You tell the AI what to look for. You train it to recognize that birds have wings and feathers, and that the environment in the background isn't a defining feature. You include photos of ducks and penguins so the AI understands that not all birds live in trees.

This seems like the obvious choice. Clear labels help the AI make more accurate predictions. But structured learning has its own limitations. It requires careful preparation to label the data properly. And if your labels are incomplete or biased, the AI will learn those limitations. If you never included ducks or penguins, the AI might still develop a flawed understanding of what a bird is.
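The effect of incomplete labels can be sketched with a nearest-neighbor classifier, one of the simplest labeled-data methods. Everything here is an assumption made for illustration: the feature layout, the example photos, and the `nearest_label` helper.

```python
import math

def nearest_label(vec, labeled_examples):
    """Return the label of the closest labeled example (1-nearest-neighbor)."""
    closest = min(labeled_examples, key=lambda ex: math.dist(vec, ex[0]))
    return closest[1]

# Hypothetical features: (visible_legs, wings, whiskers, in_water, in_tree)
incomplete = [
    ((4, 0, 0, 0, 0), "dog"),
    ((4, 0, 1, 0, 0), "cat"),
    ((2, 1, 0, 0, 1), "bird"),   # every labeled bird is in a tree
    ((0, 0, 0, 1, 0), "fish"),
]
complete = incomplete + [
    ((0, 1, 0, 1, 0), "bird"),   # duck on a pond
    ((2, 1, 0, 0, 0), "bird"),   # penguin on land
]

new_photo = (0, 1, 0, 1, 0)      # an unlabeled duck on a pond
print(nearest_label(new_photo, incomplete))
print(nearest_label(new_photo, complete))
```

With the incomplete labels, the closest match to the new photo is the fish, because the only birds the model has seen live in trees. Adding labeled ducks and penguins fixes the prediction without changing the algorithm at all.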

How This Applies to Marketing

Google's Smart Bidding algorithms use structured learning. You feed the system data from past auctions: ad placements, keywords, audience demographics. The outcomes of those auctions (conversions, conversion rates, ROAS) provide the labels that train the model. From this, the AI learns to predict which combinations of inputs will drive the best results.

Structured learning excels in environments where past behavior is a good predictor of future outcomes. It can optimize based on stable, well-defined relationships between inputs and outputs. But these models are only as good as the data they've been trained on. They can struggle when conditions change or when they encounter situations that fall outside their training data.

Unstructured learning shows up in tools like Meta's Lookalike Audiences. The AI explores vast amounts of customer data without predefined labels: browsing behaviors, purchase histories, demographics, interests. It identifies clusters and patterns that aren't obvious to human marketers, uncovering audience segments you might never have thought to target.

When Unstructured Learning Surprises You

Eric Seufert, former VP of User Acquisition at Rovio (the company behind Angry Birds), described how their Facebook advertising relied heavily on unstructured learning. When they tried to identify their most valuable customers, traditional buyer personas didn't apply. There was no clear pattern linking high-value users. They were just random groups of people who had little in common.

But when Facebook's algorithm was given the freedom to explore signals outside typical buyer personas, it successfully connected high-value customers to one another. Segmenting customers by value, then building Lookalike audiences from those cohorts, was the key to their success.

Seufert recommended a hands-off approach: let the algorithm explore the unstructured data rather than constraining it with your assumptions about who the customer should be.

This insight challenges a deeply held belief in marketing: that buyer personas are the key to effective targeting. What Seufert's experience suggests is that personas, and the manual targeting settings advertisers rely on, can sometimes be distracting or even detrimental to performance.

We've seen this pattern across industries. When we started working with Grown Brilliance, we assumed their ideal customers would be environmentally conscious or budget-conscious. Instead, we found customers from all walks of life. Different interests, socioeconomic backgrounds, and age groups. Some shopped at Walmart, others at Gucci. Some drove electric vehicles, others drove Ford F-150s. Very few fit the buyer persona we had initially envisioned.

Unstructured learning allows Google and Meta to consider thousands or millions of potential signals to understand which common behaviors are actually relevant to our customer base.

Liquidity and Campaign Consolidation

In a 2019 paper, the Interactive Advertising Bureau defined liquidity this way: "In a fluid marketplace, machine learning helps to identify the most valuable impressions. When every dollar is allowed to flow to the most valuable impression, we call this condition 'liquidity,' and it is made possible when humans take their hands off the controls and allow the system to read the terrain."

The opposite of a liquid environment is a restricted one. Every time you add a constraint to your campaigns, whether it's a narrow audience, a limited placement, a segmented budget, or a rigid bid cap, you reduce the algorithm's ability to learn and optimize.

There are four dimensions of liquidity:

Placement liquidity refers to where your ads can appear. A negative mobile bid adjustment restricts placement liquidity. So does a campaign that hasn't opted into automatic placements. The more places the system can show your ads, the more opportunities it has to find valuable impressions.

Audience liquidity is about who sees your ads. Broad match keywords increase audience liquidity. So does enabling audience expansion. The narrower your targeting, the fewer people the algorithm can learn from.

Budget liquidity means removing artificial constraints on how your budget is allocated. When you split spend across many small campaigns with individual budgets, you starve each one of the data it needs. Consolidating campaigns and using shared budgets or campaign budget optimization gives the algorithm more room to shift spend toward what's working.

Creative liquidity is achieved when you let the system test and choose the best-performing creative. Running multiple ad variations and using responsive formats allows the algorithm to learn which messages resonate with which audiences.
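The budget liquidity point comes down to simple arithmetic, sketched below. The budget, the CPA, and especially the threshold of 50 conversions per week are illustrative assumptions rather than figures from this chapter, and the sketch assumes CPA stays constant no matter how the budget is split.

```python
def conversions_per_campaign(weekly_budget, cpa, n_campaigns):
    """Weekly conversions each campaign sees when budget is split evenly."""
    return weekly_budget / n_campaigns / cpa

WEEKLY_BUDGET = 5000   # hypothetical total weekly spend
CPA = 25               # hypothetical cost per conversion, held constant
MIN_CONVERSIONS = 50   # illustrative data threshold (an assumption)

for n in (1, 4, 10):
    per_campaign = conversions_per_campaign(WEEKLY_BUDGET, CPA, n)
    status = "enough data" if per_campaign >= MIN_CONVERSIONS else "starved"
    print(f"{n:>2} campaign(s): {per_campaign:.0f} conversions each -> {status}")
```

The total number of conversions is the same in every row. What changes is how thinly the learning signal is spread: at ten campaigns, each one sees only 20 conversions a week.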

Why This Matters

The IAB paper put it plainly: "In every case, allowing machine learning algorithms to process as much data as possible improves liquidity. By removing restrictions on a campaign, media teams can test more opportunities simultaneously and ensure budgets are applied to find the right people and produce the most cost-efficient results."

In some ways, Join or Die is an entire book dedicated to the benefits of campaign liquidity. All else being equal, a liquid environment outperforms a restricted one.

This is why Google and Meta push advertisers toward consolidated campaign structures and broader targeting. It's not arbitrary or a trick to get you to spend more. These platforms are built on structured learning systems that need sufficient data volume to exit the learning phase and make confident predictions. Fragmented campaigns with narrow targeting and small budgets starve the algorithm of the information it needs.

The Learning Phase

If you've run campaigns on Google or Meta, you've seen the phrase "learning phase" in your dashboard. It's easy to treat this as a temporary inconvenience, something to wait out before performance stabilizes. But understanding what's actually happening during this phase changes how you work with these systems.

The learning phase is the period when an algorithm is actively gathering data, testing hypotheses, and figuring out which combinations of inputs drive the best outcomes. It's exploration mode. The system doesn't yet know which audiences, placements, bids, or creative variations will perform best for your specific goals. So it experiments.

During this phase, performance is volatile. This isn't a sign that something is broken. It's the algorithm doing exactly what it's supposed to do: gathering enough information to make confident predictions.

The Plinko Analogy

A useful way to visualize this is the game of Plinko from The Price Is Right. A contestant drops a disc from the top of a board covered in pegs. The disc bounces unpredictably as it falls, eventually landing in a slot at the bottom that corresponds to a prize. You have some control over where you drop the disc, but you can't predict exactly where it will land because each peg alters its path.

Launching a campaign is like dropping that disc. You've set your initial parameters: audience targeting, bids, creative, budget. But from there, the algorithm bounces through data signals, testing different combinations, adjusting based on feedback. Each "peg" it hits represents a different factor: user behavior, ad performance, competitive bids, time of day, device type. The algorithm doesn't know the best path from the start. It has to discover it through exploration.

Eventually, the disc lands in a slot. The algorithm identifies a combination of factors that seems to deliver good results, and it exits the learning phase. Now it shifts from exploration to exploitation, focusing on what it believes works rather than continuing to test broadly.

The Catch: Local vs. Global Optimum

Here's where it gets interesting. The slot the algorithm lands in might not be the best slot available. It might just be the best slot it found on this particular path.

In machine learning, we distinguish between a local optimum and a global optimum. A local optimum is the best outcome based on the data the algorithm has processed so far. A global optimum is the best possible outcome across all potential paths. The algorithm might have landed in a decent slot, but if it had hit different pegs during the learning phase, it could have found something better.

Once the algorithm exits the learning phase, it becomes more conservative and focuses on optimizing around what it already knows. This is efficient, but it also means the system won't actively seek out better opportunities unless you prompt it to.

This is why going back into the learning phase is sometimes necessary. Conditions change. New competitors enter the market. Consumer behavior shifts. Creative gets stale. If your algorithm is stuck optimizing around signals that no longer reflect reality, it won't adapt on its own.

Resetting the learning phase is like dropping a new Plinko disc. You reintroduce volatility and uncertainty, but you also open the possibility of landing in a better slot. The algorithm gets to explore new paths, encounter new data signals, and potentially find strategies that outperform the current one.
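The local-versus-global idea can be sketched with a simple hill climb, where each position in a list is a "slot" and its value is how good that slot is. The landscape values and the `hill_climb` helper are hypothetical, and ad platforms do not necessarily search this way. The sketch only shows why the starting point matters.

```python
def hill_climb(values, start):
    """Step to the better neighbor until no neighbor improves; return the index."""
    i = start
    while True:
        neighbors = [j for j in (i - 1, i + 1) if 0 <= j < len(values)]
        best = max(neighbors, key=lambda j: values[j])
        if values[best] <= values[i]:
            return i          # no neighbor is better: a (possibly local) peak
        i = best

# Hypothetical performance landscape: a small peak (5) and a bigger one (9)
landscape = [1, 3, 5, 4, 2, 6, 9, 7, 3]

first_drop = hill_climb(landscape, start=0)
second_drop = hill_climb(landscape, start=4)
print(first_drop, landscape[first_drop])
print(second_drop, landscape[second_drop])
```

The first drop settles on the smaller peak and stays there. The second drop, starting elsewhere, reaches the higher one. A drop starting at index 3 would slide back to the smaller peak, which is a reminder that a reset creates the possibility of a better outcome, not a guarantee.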

This isn't something to do constantly. Frequent resets prevent the algorithm from ever building confidence. But periodic resets, especially when market conditions change or performance plateaus, can unlock improvements that incremental optimization never would.

Exploration vs. Exploitation

The learning phase represents the tension between exploration and exploitation that runs through all of machine learning.

Exploration is about gathering information. Trying new things. Accepting short-term inefficiency in exchange for learning. Exploitation is about acting on what you've learned. Maximizing returns based on current knowledge. Both are necessary, but they pull in opposite directions.

An algorithm stuck in exploration never delivers consistent results. An algorithm stuck in exploitation never discovers better strategies. The art is knowing when to push the system back into exploration and when to let it exploit what it knows.
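A common textbook way to balance the two is an epsilon-greedy strategy: explore at random a small fraction of the time, exploit the best-known option otherwise. The sketch below is a generic illustration, not a description of how Google or Meta actually allocate impressions. The conversion rates, the `epsilon_greedy` function, and its parameters are assumptions.

```python
import random

def epsilon_greedy(true_rates, epsilon, rounds, seed=0):
    """Simulate choosing among ads whose true conversion rates are unknown."""
    rng = random.Random(seed)
    pulls = [0] * len(true_rates)   # impressions given to each ad
    wins = [0] * len(true_rates)    # conversions observed for each ad
    for _ in range(rounds):
        if sum(pulls) == 0 or rng.random() < epsilon:
            ad = rng.randrange(len(true_rates))              # explore
        else:
            ad = max(range(len(true_rates)),                 # exploit
                     key=lambda a: wins[a] / pulls[a] if pulls[a] else 0.0)
        pulls[ad] += 1
        if rng.random() < true_rates[ad]:
            wins[ad] += 1
    return pulls, wins

TRUE_RATES = [0.02, 0.05, 0.10]  # hypothetical, hidden from the algorithm
for eps in (0.0, 0.1, 1.0):
    pulls, wins = epsilon_greedy(TRUE_RATES, eps, rounds=5000)
    print(f"epsilon={eps}: impressions per ad {pulls}, conversions {sum(wins)}")
```

With `epsilon=1.0` the system never stops experimenting, and with `epsilon=0.0` it commits to whatever looked best after its first impression. Values in between trade a little short-term efficiency for the chance to discover a better ad.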

For marketers, this means resisting the urge to judge campaigns too quickly during the learning phase, while also recognizing when a campaign has become too set in its ways. The goal isn't to avoid the learning phase. It's to use it strategically.

Confidence vs. Accuracy

As the algorithm gathers data and exits the learning phase, it becomes more confident in its predictions. But confidence and accuracy are not the same thing. A confident AI may not always be accurate, and an accurate AI may lack the confidence to scale. Understanding the relationship between these two factors is critical for optimizing campaigns and avoiding costly mistakes.

Confidence refers to the probability the algorithm assigns to a particular outcome. It's the system's internal gauge of how likely it believes its predictions are correct. Accuracy reflects how well the algorithm actually predicts outcomes. These two factors don't always move in sync, and this mismatch can lead to a variety of results, from campaign success to outright failure.

Imagine an algorithm predicts that the probability of a conversion is 50%. But is that probability based on having seen enough data to confidently state the event happens half the time? Or is it simply the result of not having enough examples to make a more informed prediction? In both cases, the AI might claim "50%," but in one scenario it's confident, and in the other it's guessing.
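One way to see the difference between the two "50%" estimates is to put an uncertainty range around each. The sketch below uses the Wilson score interval, a standard statistical formula. Whether any ad platform measures confidence this way is not something this chapter claims, and the sample sizes are hypothetical.

```python
import math

def wilson_interval(conversions, trials, z=1.96):
    """Wilson score interval for a conversion rate (z=1.96 is roughly 95%)."""
    p = conversions / trials
    denom = 1 + z**2 / trials
    center = (p + z**2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return center - half, center + half

# Both samples show a 50% observed rate, with very different amounts of evidence
for conversions, trials in [(1, 2), (500, 1000)]:
    low, high = wilson_interval(conversions, trials)
    print(f"{conversions}/{trials}: plausible rate between {low:.2f} and {high:.2f}")
```

With 1 conversion in 2 tries, the plausible range runs from roughly 0.09 to 0.91, which is close to knowing nothing. With 500 in 1,000, it narrows to roughly 0.47 to 0.53. The point estimate is identical, but only the second one is worth scaling on.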

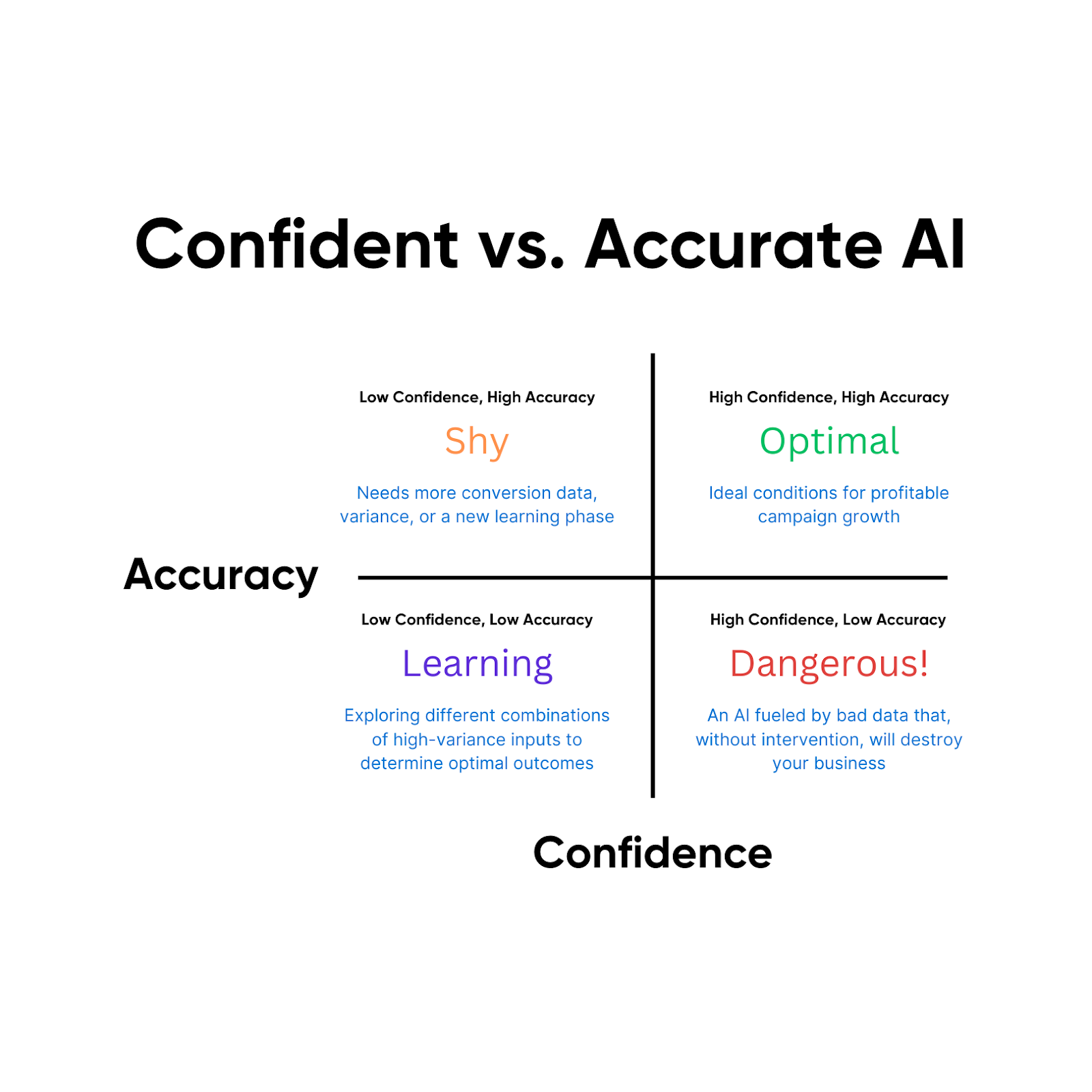

The Four Quadrants

To understand how confidence and accuracy interact, it helps to visualize them on a matrix with confidence on one axis and accuracy on the other.

Low Confidence, Low Accuracy: The Learning AI

This is an algorithm still in the learning phase. It's exploring various combinations of inputs, analyzing high-variance data, trying to understand which levers drive results. At this stage, the system is neither confident in its predictions nor accurate in its results. Performance is volatile. This stage is necessary, but marketers need to be patient and let the algorithm gather enough data to move forward.

High Accuracy, Low Confidence: The Shy AI

This is an algorithm that has learned which combinations work, but doesn't yet have the confidence or data volume to scale those insights. You'll often see this with campaigns that plateau. You increase the budget, but the platform continues to spend at the same rate. The AI isn't failing. It's hesitant. It hasn't explored enough auctions or gathered enough conversion data to feel secure about expanding. If you want to overcome this plateau, you may need to feed the algorithm more data or reintroduce variance by resetting the learning phase.

High Confidence, High Accuracy: The Optimal AI

This is the goal. The algorithm has gathered enough data to accurately predict which strategies produce the best results, and it's confident enough to act on those predictions at scale. It can enter new auctions, adjust bids, and maintain efficiency as budget increases. This is where most advertisers want to be.

High Confidence, Low Accuracy: The Dangerous AI

This is the most dangerous quadrant. The algorithm believes it has figured things out. It's confident in its predictions. But in reality, it's making decisions based on flawed or insufficient data.

Consider a scenario where your conversion tracking pixel is placed on the "add to cart" page instead of the "purchase confirmation" page. The AI would confidently optimize for users who reach the cart, but it would have no understanding of which users actually complete a purchase. It's confidently driving conversions, just not the conversions you care about. Left unchecked, this overconfidence can drain your budget and derail your business objectives.

This is why monitoring the data you feed the algorithm matters so much. Inaccurate data leads to false confidence, and a "dangerous AI" can cause significant harm if allowed to operate unchecked. In these cases, the best course of action is to reset the learning phase, clear the flawed data, and retrain on accurate signals.

The Breakdown Effect

This brings us to one of the most misunderstood dynamics in Meta advertising.

A campaign runs across two placements. At the end of the flight, one placement shows a $15 CPA, the other shows $25. But Meta allocated 80% of the budget to the $25 placement. Many advertisers see this and conclude the system made a mistake.

It probably didn't.

Meta's delivery system uses discount pacing. It front-loads the cheapest conversions early in your budget cycle, then gradually moves into more expensive opportunities. Every ad, ad set, and placement has a ceiling: a point where the next conversion costs more than it would somewhere else. The algorithm is constantly calculating where the next cheapest conversion lives across your entire campaign.

That $15 CPA placement might be exhausted. The cheap conversions have been captured and the next conversion from that placement might cost $40. Meanwhile, the $25 placement still has room to run. Meta's system sees this, but your dashboard doesn't show it.

This is the breakdown effect. The system optimizes for the best aggregate outcome, not the best-looking individual line item. When you pause a placement because its average CPA looks worse, you often force spend into a more expensive path.
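The gap between average and marginal cost can be worked through with a small sketch. It is not Meta's delivery algorithm. It assumes hypothetical marginal cost curves for two placements and a greedy `allocate` helper that always buys the next cheapest conversion available.

```python
import heapq

def allocate(budget, marginal_costs):
    """Buy the next-cheapest conversion across placements until the budget
    cannot cover it. `marginal_costs` maps placement -> rising cost of each
    successive conversion (hypothetical numbers)."""
    spend = {name: 0 for name in marginal_costs}
    conversions = {name: 0 for name in marginal_costs}
    # Heap entries: (cost of next conversion, placement, index into its list)
    heap = [(costs[0], name, 0) for name, costs in marginal_costs.items() if costs]
    heapq.heapify(heap)
    while heap:
        cost, name, i = heapq.heappop(heap)
        if cost > budget:
            break                      # nothing cheaper remains anywhere
        budget -= cost
        spend[name] += cost
        conversions[name] += 1
        if i + 1 < len(marginal_costs[name]):
            heapq.heappush(heap, (marginal_costs[name][i + 1], name, i + 1))
    return spend, conversions

placements = {
    "A": [10, 15, 20, 40, 50, 60],   # cheap at first, then quickly exhausted
    "B": [25] * 20,                  # steady cost with plenty of room
}
spend, conversions = allocate(245, placements)
for name in placements:
    print(name, spend[name], conversions[name], spend[name] / conversions[name])

# Counterfactual: pause B and force the same budget into A alone
solo_spend, solo_conversions = allocate(245, {"A": placements["A"]})
print(solo_spend["A"], solo_conversions["A"])
```

In this setup, placement A reports a $15 average CPA on $45 of spend, while placement B reports $25 on $200, about 80% of the budget. Pausing B does not produce more $15 conversions. It forces spend into A's expensive tail, yielding 6 conversions instead of 11 from the same budget.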

Therefore, it's crucial to evaluate performance at the campaign level, not the component level. The algorithm is making tradeoffs you can't see in a breakdown report.

Strategy Still Comes First

Everything in this chapter represents best practices for training ad platform AI to optimize more effectively toward your goals. Understand how structured and unstructured learning work. Give the algorithm liquidity. Let the learning phase run its course. Know the difference between confidence and accuracy. Don't overreact to the breakdown effect.

These principles matter. They're how you win at the margin.

But in true "Never Always, Never Never" fashion, there are exceptions to all of them. More importantly, none of these optimizations will overcome bad strategy.

Many advertisers have become obsessed with resetting learning phases, convinced that one more roll of the Plinko disc will unlock a cohort of truly profitable customers. They tweak audience settings, restructure campaigns, test new bid strategies, all in pursuit of some hidden configuration that will finally make the math work. This is a futile effort. If the underlying strategy is flawed, no amount of platform optimization will save it.

Chasing profitability and growth exclusively through channel tactics is not a sustainable way to build a brand. The platforms are powerful, but they're tools. They amplify whatever you feed them. Feed them a weak value proposition, undifferentiated creative, or a product nobody wants, and they'll efficiently spend your budget proving that out.

This chapter is the first time we've gone deep on tactics. It matters because the details matter. But it's worth grounding this clearly: platform optimization is always secondary to developing real marketing strategy. Get the strategy right first. Then let the machines do what they're good at.
